Today I'm here with a new Blogger topic: "How to Add a Custom Robots.txt to Your Blog". But first, let's discuss it a little. I know you have a question in mind: what is a custom robots.txt, and why do we use it?

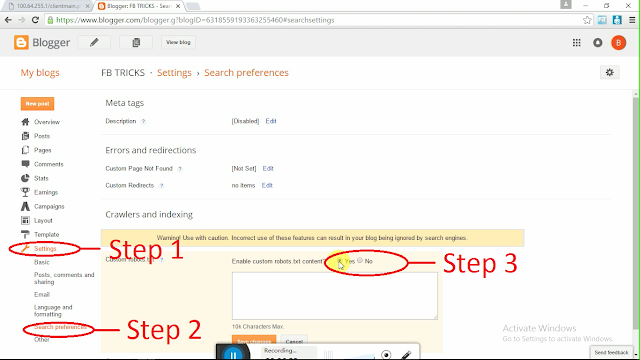

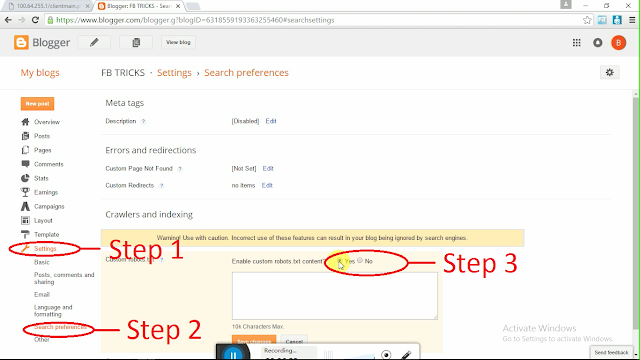

2. Click on Settings >> Search Perferences >> Edit >> Select Yes.

3. Copy the below Code.

User-agent: Mediapartners-Google

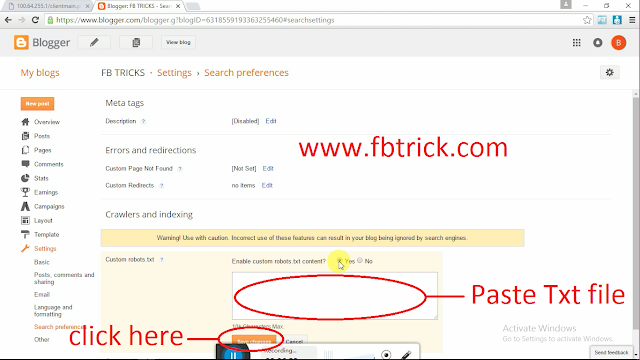

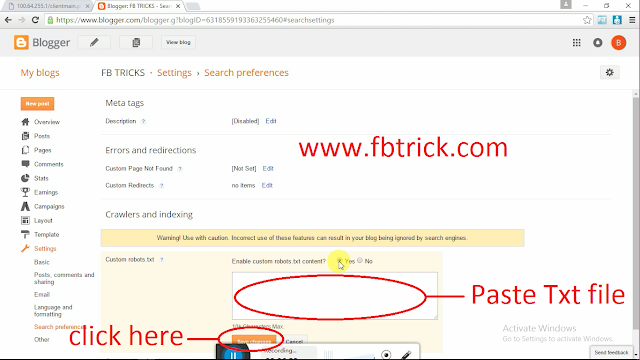

4. Paste the Code in Blank field. Now Change http://example.blogspot.com with your Blog URL and click on Save Changes.

You Are Done. Congratulations..

What is Custom Robots.Txt And Why we use it?

Robots.txt is a text file which contains few lines of simple code. It is saved on the website or blog’s server which instruct the web crawlers to how to index and crawl your blog in the search results. That means you can restrict any web page on your blog from web crawlers so that it can’t get indexed in search engines like your blog labels page, your demo page or any other pages that are not as important to get indexed. Always remember that search crawlers scan the robots.txt file before crawling any web page.Follow the steps and add Custom Robots.txt file in Your Blog

1. Login your blogger account.2. Click on Settings >> Search Perferences >> Edit >> Select Yes.

3. Copy the below Code.

User-agent: Mediapartners-Google

Disallow:

User-agent: *

Disallow: /search

Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

You Are Done. Congratulations..
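If you want to double-check what these rules allow, Python's standard-library `urllib.robotparser` can evaluate them locally. This is only a sketch: the helper names `build_robots_txt` and `make_parser` and the sample post and label URLs are hypothetical. Also note that `robotparser` applies the first rule that matches in a group, while Google uses the most specific (longest) matching rule; for this particular file both give the same answers.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt from the steps above, with {blog_url} standing in for
# http://example.blogspot.com (the placeholder is an assumption of this sketch).
ROBOTS_TEMPLATE = """User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: {blog_url}/feeds/posts/default?orderby=UPDATED
"""


def build_robots_txt(blog_url: str) -> str:
    """Hypothetical helper: fill in your blog URL, as step 4 asks you to do by hand."""
    return ROBOTS_TEMPLATE.format(blog_url=blog_url.rstrip("/"))


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    blog = "http://example.blogspot.com"
    rules = make_parser(build_robots_txt(blog))

    # Hypothetical URLs on the example blog: a post and a label page.
    checks = [
        ("Googlebot", blog + "/2020/01/my-post.html"),
        ("Googlebot", blog + "/search/label/SEO"),
        ("Mediapartners-Google", blog + "/search/label/SEO"),
    ]
    for agent, url in checks:
        print(agent, url, rules.can_fetch(agent, url))

    # Sitemap URLs declared in the file.
    print(rules.site_maps())
```

Running it shows Googlebot allowed on the post but blocked from the label page, while Mediapartners-Google may fetch both.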

Alternatively, watch our video to see how to add a custom robots.txt file to your Blogger blog.